One of the best parts of my job is having the opportunity to work with some amazing customers who are putting together innovative solutions in the cloud. In this post, I’d like to share some details about a recent engagement that taught me a lot about Azure Functions, scale, and other related topics.

The source code for this solution can be found at: https://github.com/dbarkol/functions-scale-test

The Challenge

For this project, our customer had a very interesting set of goals:

- Retrieve the most recent, up-to-date forecast information for 500,000 locations in the United States by calling an API hosted on weather.com.

- Complete the process in under 5 minutes.

- Repeat every 15 minutes.
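To put these targets in perspective, here is the back-of-the-envelope arithmetic as a small Python sketch. It assumes requests are spread evenly over the window and ignores retries, throttling and cold starts, so treat it as a lower bound rather than a measured figure.

```python
# Back-of-the-envelope throughput needed to meet the project's goals.
# Assumes requests are spread evenly over the window (no retries, no warm-up).

TOTAL_LOCATIONS = 500_000   # one API request per location (no batching)
TARGET_SECONDS = 5 * 60     # complete the run in under 5 minutes
INTERVAL_SECONDS = 15 * 60  # repeat every 15 minutes


def required_rate(requests: int, seconds: float) -> float:
    """Average requests per second needed to finish within the given time."""
    return requests / seconds


if __name__ == "__main__":
    rate = required_rate(TOTAL_LOCATIONS, TARGET_SECONDS)
    runs_per_hour = 3600 // INTERVAL_SECONDS
    print(f"Required average rate: {rate:,.0f} requests/second")
    print(f"Requests per hour: {TOTAL_LOCATIONS * runs_per_hour:,}")
```

That works out to roughly 1,667 requests per second on average, and 2,000,000 requests every hour, which is the rate the rest of the design has to sustain.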

In the end, with the help of a great team, we were able to accomplish this in under 3 minutes! Here is how we put it together.

Overall Design

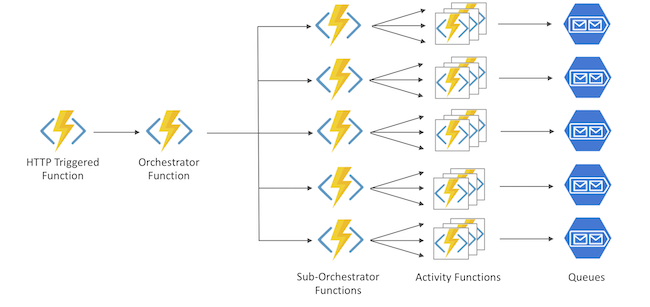

At a very high level, this diagram represents the overall flow:

- Coordinates are stored in Azure Table Storage – it’s cheap, easy to work with and fast (as long as you partition efficiently). Since the records are not going to change, this is only done once.

- When the process begins, Durable Functions are used to populate a group of Storage Queues with the coordinates from the table. Each message represents a pending request to the weather service.

- An Azure Function is invoked for each message in the queue.

- Requests are made to the third-party service to get the forecast details.

- The results of the forecast requests are published to Event Hubs for further processing.

What about batching calls to weather.com?

One of the first challenges we encountered was that the weather.com API provided to the customer did not support batching requests. This means that each location (latitude and longitude coordinates) requires its own request to the API.

Load Balancing

The initial design used a single storage queue and Function App. After some testing, it quickly became apparent that the bottleneck was the single Function App processing all the messages. Even though the queue-triggered function scaled out fairly quickly, it didn’t meet the goal of completing all 500,000 requests in under 5 minutes.

To address this, we balanced the load across multiple queues and Function Apps. Each queue has a dedicated Function App and only a subset of the records to process. For example, the first queue is responsible for records 1-100,000, the second queue is populated with the next 100,000 records from the table, and so on. This basic load-balancing exercise distributes the work across multiple services that can run in parallel.

Ultimately, this approach significantly increases the throughput and rate of requests made to the weather service.
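The range assignment described above can be sketched in a few lines of Python. This is an illustration of the idea, not code from the repository: `assign_ranges` is a hypothetical helper, and the 1-based, inclusive record numbering simply mirrors the example in the text.

```python
from typing import List, Tuple


def assign_ranges(total_records: int, queue_count: int) -> List[Tuple[int, int]]:
    """Split records 1..total_records into contiguous, inclusive ranges, one per queue.

    Any remainder is spread over the first queues so sizes differ by at most one.
    """
    base, remainder = divmod(total_records, queue_count)
    ranges = []
    start = 1
    for q in range(queue_count):
        size = base + (1 if q < remainder else 0)
        end = start + size - 1
        ranges.append((start, end))
        start = end + 1
    return ranges


if __name__ == "__main__":
    for queue_number, (first, last) in enumerate(assign_ranges(500_000, 5), start=1):
        print(f"queue {queue_number}: records {first:,}-{last:,}")
```

With five queues, each Function App is responsible for 100,000 requests, which means roughly 333 requests per second per app to stay within the 5-minute target, a far smaller share than a single app handling everything.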

Calling the Weather.com API

The Weather Company has a set of premium APIs for enterprise customers that allow them to retrieve forecast information in a variety of ways. Obtaining an API key for this advanced analytics service requires a content licensing agreement with The Weather Company.

The function that processes each message is extremely simple: it is nothing more than a queue-triggered function with an output binding to an Event Hub. Even though each request to the weather API can cover only one location, the function expects the message payload to contain a collection of coordinates, in case batching is supported in the future.


```csharp
using System;
using System.Threading.Tasks;
using System.Net.Http;
using Microsoft.Azure.WebJobs;
using Microsoft.Extensions.Logging;
using System.Collections.Generic;
using Models;
using Newtonsoft.Json;

namespace ForecastProcessor
{
    public static class GetForecasts
    {
        #region Private Data Members

        // It is important that we instantiate this outside the scope of the function so
        // that it can be reused with each invocation.
        private static readonly HttpClient Client = new HttpClient();

        // Weather.com API key
        private static readonly string ApiKey = System.Environment.GetEnvironmentVariable("WeatherApiKey");

        #endregion

        [FunctionName("GetForecasts")]
        public static async Task Run(
            [QueueTrigger("%QueueName%", Connection = "QueueConnectionString")] Coordinates[] items,
            [EventHub("%EventHubName%", Connection = "EventHubsConnectionString")] IAsyncCollector<Forecast> results,
            ILogger log)
        {
            log.LogInformation("GetForecasts triggered");

            // Iterate through the collection of coordinates, retrieve
            // the forecast and then pass it along.
            foreach (var c in items)
            {
                // Format the API request with the coordinates and API key
                var apiRequest =
                    $"https://api.weather.com/v1/geocode/{c.Latitude}/{c.Longitude}/forecast/fifteenminute.json?language=en-US&units=e&apiKey={ApiKey}";

                // Make the forecast request and read the response;
                // the response message is disposed once its content has been read.
                using (var response = await Client.GetAsync(apiRequest))
                {
                    var forecast = await response.Content.ReadAsStringAsync();
                    log.LogInformation(forecast);

                    // Send to an event hub
                    await results.AddAsync(new Forecast
                    {
                        Longitude = c.Longitude,
                        Latitude = c.Latitude,
                        Result = forecast
                    });
                }
            }
        }
    }
}
```
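For reference, here is a small Python sketch of what a producer could put on the queue so that each message deserializes into the `Coordinates[]` parameter. It assumes the JSON property names match the `Latitude` and `Longitude` members the function reads (the `Coordinates` model itself isn't shown here), and `build_messages` is a hypothetical helper, not code from the repository. It only builds the message bodies; sending them to a queue is left out.

```python
import json
from typing import Iterable, List, Tuple


def build_messages(coords: Iterable[Tuple[float, float]], batch_size: int = 1) -> List[str]:
    """Group (latitude, longitude) pairs into JSON-array message bodies.

    Each body is a JSON array of objects, matching a Coordinates[] binding.
    batch_size=1 mirrors the current API limit of one location per request.
    """
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    coords = list(coords)
    messages = []
    for i in range(0, len(coords), batch_size):
        batch = coords[i:i + batch_size]
        body = [{"Latitude": lat, "Longitude": lon} for lat, lon in batch]
        messages.append(json.dumps(body))
    return messages


if __name__ == "__main__":
    sample = [(47.61, -122.33), (40.71, -74.01), (41.88, -87.63)]
    for m in build_messages(sample):
        print(m)
```

With `batch_size=1`, every message is an array holding a single location; raising it later would let one invocation handle several coordinates without changing the function signature. Keep in mind that a Storage Queue message is limited to 64 KB, which caps how large a batch can grow.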

Generating the Load

Now comes the most challenging part: generating the load for the Function Apps to process. Remember, the goal is to distribute 500,000 records evenly across five queues every 15 minutes, as quickly as possible. That’s where Durable Functions and the fan-out/fan-in pattern come in.

A quick breakdown of the load generator:

- It begins with an ordinary HTTP-triggered function that can be called manually or on a recurring schedule.

- An orchestrator function is invoked to begin the workflow.

- The orchestrator function calls 5 sub-orchestrator functions, one for each queue.

- The sub-orchestrators spin up a large set of activity functions that will retrieve records from the table and send messages to their respective queues.
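The shape of this workflow can be sketched with plain `asyncio` in Python. This is not the Durable Functions API: the table and the five storage queues are simulated in memory, and `read_partition`, `send_activity`, `sub_orchestrator` and `orchestrator` are hypothetical names. The figure of 100 activities per queue is an assumption derived from 100,000 records per queue divided into partitions of 1,000 records. In C# Durable Functions, the same shape is typically expressed with `CallSubOrchestratorAsync` and `CallActivityAsync` awaited through `Task.WhenAll`.

```python
import asyncio
from typing import Dict, List

QUEUE_COUNT = 5
PARTITIONS_PER_QUEUE = 100    # assumed: 100,000 records per queue / 1,000 per partition
RECORDS_PER_PARTITION = 1_000


async def read_partition(queue_number: int, partition: int) -> List[str]:
    """Stand-in for a table query on one partition (returns simulated records)."""
    await asyncio.sleep(0)  # yield control, as a real I/O call would
    key = f"{queue_number}-{partition}"
    return [f"{key}/{row}" for row in range(RECORDS_PER_PARTITION)]


async def send_activity(queue_number: int, partition: int, queue: asyncio.Queue) -> int:
    """Activity: read one partition and enqueue one message per record."""
    records = await read_partition(queue_number, partition)
    for record in records:
        queue.put_nowait(record)
    return len(records)


async def sub_orchestrator(queue_number: int, queue: asyncio.Queue) -> int:
    """Fan out one activity per partition for a single queue, then fan in the counts."""
    counts = await asyncio.gather(
        *(send_activity(queue_number, p, queue) for p in range(PARTITIONS_PER_QUEUE))
    )
    return sum(counts)


async def orchestrator(queues: Dict[int, asyncio.Queue]) -> int:
    """Fan out one sub-orchestrator per queue and total the messages sent."""
    totals = await asyncio.gather(
        *(sub_orchestrator(n, q) for n, q in queues.items())
    )
    return sum(totals)


async def main() -> int:
    queues = {n: asyncio.Queue() for n in range(1, QUEUE_COUNT + 1)}
    sent = await orchestrator(queues)
    print(f"messages sent: {sent:,}")
    return sent


if __name__ == "__main__":
    asyncio.run(main())
```

The fan-in step here does nothing more than add up message counts, which makes it easy to see that the real value of the pattern in this scenario is the fan-out.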

All the code for the orchestrators and activity functions resides in this static class: https://github.com/dbarkol/functions-scale-test/blob/master/ForecastGenerator/SendCoordinates.cs.

Partitioning

Reading from Azure Tables can be extremely quick as long as you use partitions effectively. This awesome post from Troy Hunt (a bit outdated but still very relevant) highlights the importance of partitions when working with tables: https://www.troyhunt.com/working-with-154-million-records-on/.

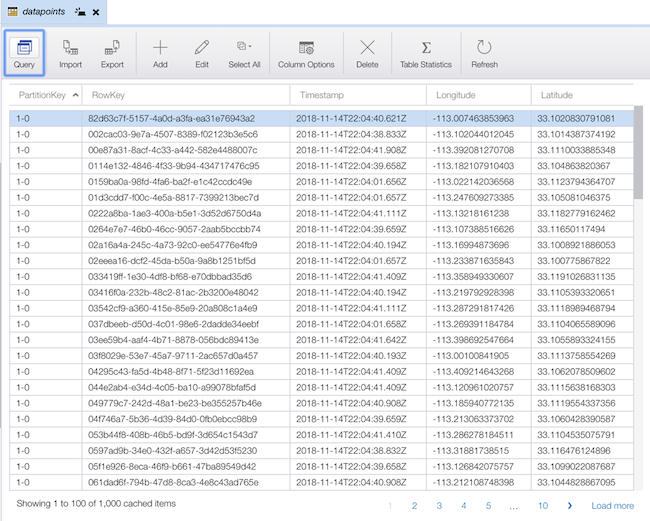

When putting this together I didn’t realize that you could only retrieve 1,000 records at a time from a table. This forced me (in a good way) to come up with a simple partitioning strategy. In short, every set of 1,000 records was given a unique partition key that had the following naming convention: {queue-number}-{partition-count}.

So the first 1,000 records for queue #1 would have the partition key 1-0. The next 1,000 records would be assigned the key 1-1. The following screenshot highlights some of these records in Azure Storage Explorer:

Now, when the coordinates are retrieved by the activity functions, they return 1,000 records at a time.
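Here is a Python sketch of the key scheme, assuming records are numbered from 0 across the whole table and split evenly into 100,000 records per queue. `partition_key` and `partition_filter` are hypothetical helpers, not code from the repository. The filter string uses the standard OData `PartitionKey eq '...'` syntax that Azure Table queries accept.

```python
RECORDS_PER_QUEUE = 100_000     # assumed even split across five queues
RECORDS_PER_PARTITION = 1_000   # one partition per 1,000 records


def partition_key(record_index: int) -> str:
    """Partition key '{queue-number}-{partition-count}' for a 0-based record index."""
    queue_number = record_index // RECORDS_PER_QUEUE + 1   # queues are numbered from 1
    partition = (record_index % RECORDS_PER_QUEUE) // RECORDS_PER_PARTITION  # from 0
    return f"{queue_number}-{partition}"


def partition_filter(queue_number: int, partition: int) -> str:
    """OData filter string that selects every entity in one partition."""
    return f"PartitionKey eq '{queue_number}-{partition}'"


if __name__ == "__main__":
    for i in (0, 999, 1_000, 100_000, 499_999):
        print(i, partition_key(i))
    print(partition_filter(1, 0))
```

Queue numbers start at 1 and partition counts at 0, matching the 1-0 and 1-1 examples above. Under this even split, each queue ends up with 100 partitions (1-0 through 1-99 for the first queue), so each activity function only ever asks for one partition.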

Azure Service Limits and Design Considerations

Looking back on this project, one of the key takeaways was the importance of researching and understanding the limits for each of the services we used.

This goes beyond just learning about quotas within a subscription. It is about considerations such as service level agreements (SLAs), throughput options, costs and a plethora of other variables that should influence how you design and architect solutions in the cloud. These limits, strengths and weaknesses are magnified when scale and performance are principal requirements of a solution.

Thank you for reading!

Was all this done on a Consumption plan or an App Service plan?

Consumption plan. 🙂

You might consider using the QueueBatch NuGet package (https://github.com/Scooletz/QueueBatch) in your queue-triggered function. It enables batch processing instead of one function execution per message, which lets you call the weather API in parallel and also reduces the cost of the function.

Thank you for this reference. I’m going to check it out.

Very informative article. Thanks for sharing.

Is there particular reasoning behind using storage queues vs. event hub for the work distribution?

Hi, Nuri. I went with queues because they’re a natural fit for this competing-consumer model, and they also scale very quickly with Azure Functions. Event Hubs doesn’t seem like the right fit for the load-generation part: we don’t need ordering or message retention, and it’s not intended to act like a queue. The scale needs to be organic each time this kicks off (every 15 minutes). If we used Event Hubs, the messages would stay there and the scale on the Functions side wouldn’t even come close.

[…] if you’re using the Microsoft cloud and you need to Scale Azure Functions to Make 500,000 Requests to Weather.com in Under 3 minutes, David Barkol has you […]

Is there a reason you didn’t just have the DF activity functions hit the API directly in parallel (fan out/in pattern) instead of going to an intermediate queue?

Going to actually try that next. It was also an experiment to see how fast the function app would scale with a queue trigger. However, the intermediate queues might not even be necessary with your suggestion.

Very informative article, thank you for writing it up. I see that these are HTTP triggers. How did you schedule them to run every 15 minutes?

A Logic App with a Recurrence trigger.

Are you using Azure Key Vault to retrieve your keys?

Not in this sample, but that is a great idea.

I don’t understand why you went with Durable Functions and the fan-out/fan-in pattern. The Durable Functions extension for Azure Functions allows your functions to be stateful, and you don’t need that. You also don’t need to fan back in: there is no processing to do once the entire batch is completed, and you don’t need to combine any of the data.

That’s really good feedback. I wanted to experiment with generating a lot of messages quickly and thought that fanning out with activities would be an interesting approach. I agree with your points and would definitely do things differently next time. Learned a lot during the process though.

Thanks for the response. I’m exploring a similar design as I have a similar use case, but not related to the weather at all ;).

I’ve settled on Azure Functions after reviewing the other options. I did a primitive PoC with multiple deployed WebJobs and storage queues, but I didn’t like the implementation.

I still like the queue-based approach with Azure Functions because it lends itself to the simple, built-in scale-out of FaaS/PaaS. I’m iterating on the design to see whether I can live without the event-based queue (but I’m still leaning towards keeping it, since it’s effective). I’ll update you here if I change the design.

I will add that your approach works really well from an ease-of-implementation perspective. All of the queue handling is hidden and done for you. Even though you don’t need it, the statefulness of Durable Functions probably doesn’t add much overhead. To perfect the architecture, you’d need to manage your own storage queues and set up new functions as listeners to fan out. You wouldn’t need to fan back in, and you wouldn’t be maintaining any state either. It’s hard to argue against out-of-the-box Durable Functions from a pure ease-of-implementation and maintenance perspective.